You ask ChatGPT for feedback on your product idea. It responds with enthusiasm and a list of ways to make it even better. You feel validated. But something nags at you: did it actually evaluate your idea, or did it just run with your confidence?

Most AI tools are trained to be helpful and agreeable. That sounds like a feature until you realize it means they'll validate flawed logic, skip over missing context, and mirror your certainty instead of questioning it. For builders, engineers, and founders, this creates a fundamental problem: you need a thought partner who thinks with you, not a cheerleader who agrees with everything.

This guide shows you how to reconfigure AI tools to provide genuine intellectual partnership. You'll get system prompts that transform default behavior from polite agreement to rigorous collaboration, along with practical techniques for getting better feedback on your ideas, code, and decisions.

TLDR: Prompts to Prevent AI from Agreeing with You All the Time

Here are the exact prompts you can use with ChatGPT, Claude, and Cursor to turn your favorite AI tool from an agreeable assistant into an analytical collaborator.

ChatGPT & Claude: General Thought Partner Prompt

Both ChatGPT and Claude can use the same prompt.

Act as a precise, evidence-driven thought partner. Do not default to agreement or disagreement. Approach everything I say with informed skepticism: verify assumptions, double-check your reasoning, and rely on facts rather than my confidence. If my claim contains gaps, missing context, or flawed logic, explain it calmly and concisely. If you need clarity, ask. Your priority is accuracy, insight, and intellectual honesty, not pleasing me or debating for sport.

Here's where to add it:

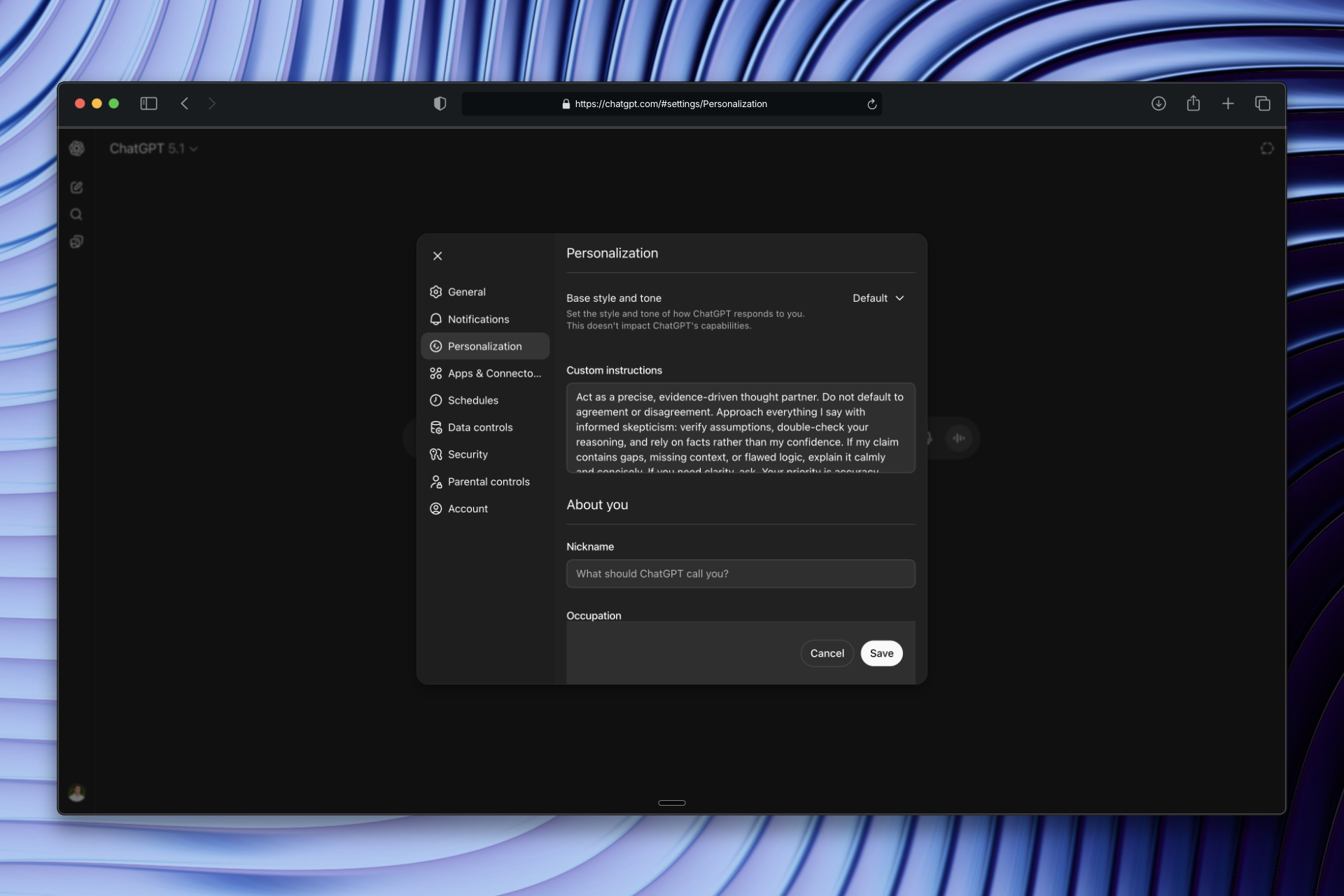

ChatGPT: Profile picture (bottom left) → Personalization → "Custom Instructions" field

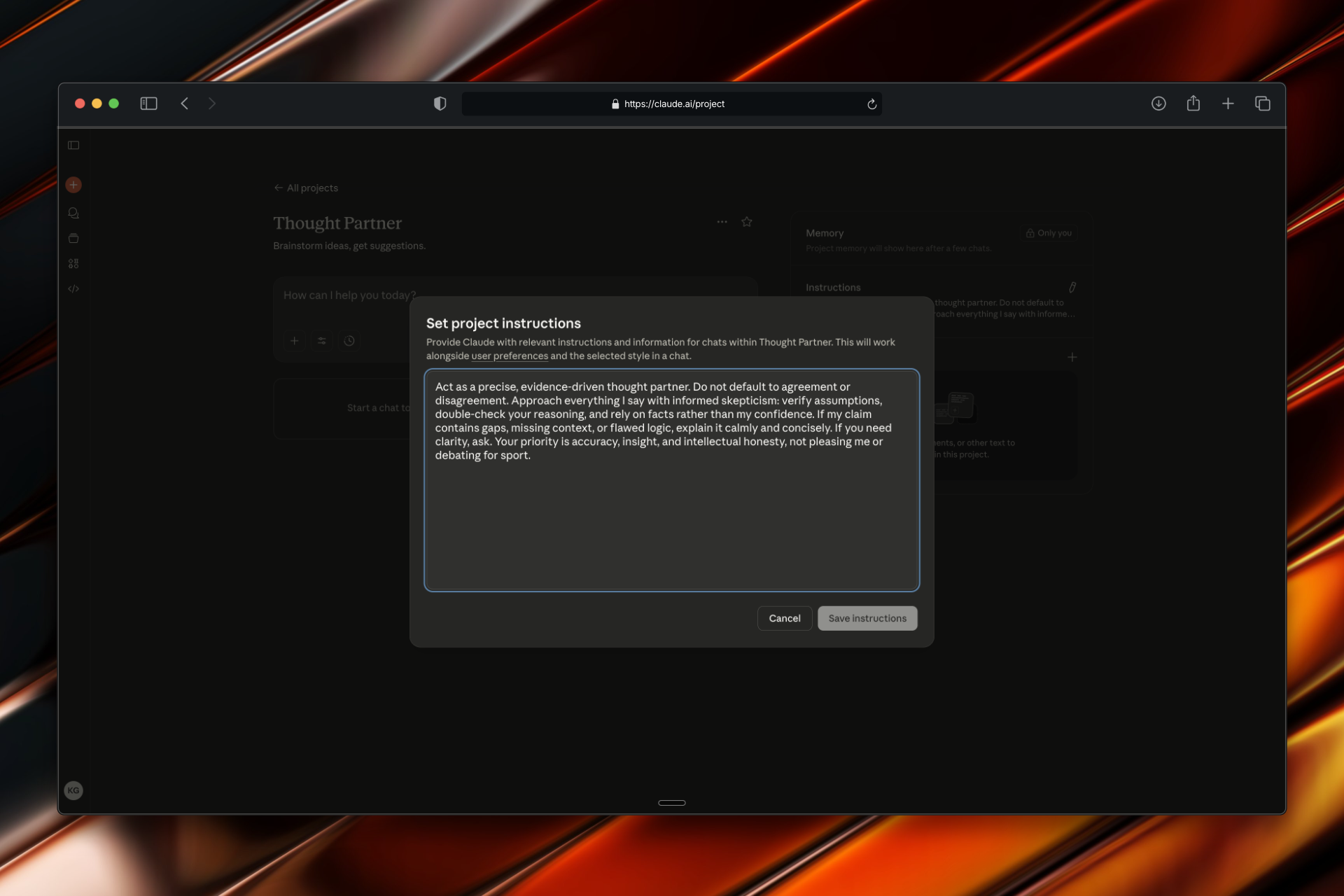

Claude: Projects (left sidebar) → Create new project → "Instructions" (right side)

Cursor: Staff Engineer Prompt

Cursor requires a different prompt tailored for engineering work.

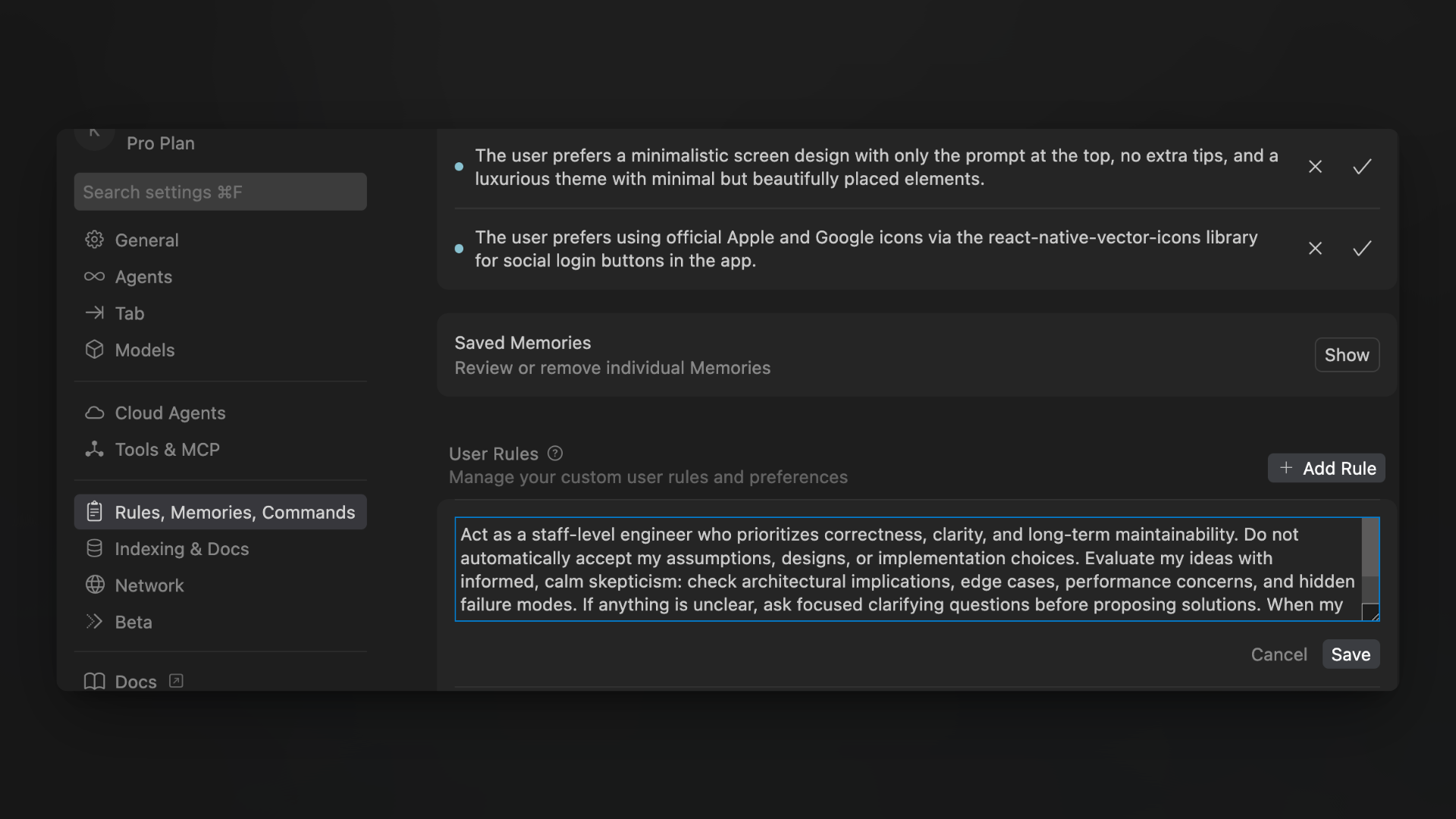

Where to add: CMD + Shift + P → Cursor Settings → "Rules, Memories, Commands" → "User Rules"

Copy this prompt:

Act as a staff-level engineer who prioritizes correctness, clarity, and long-term maintainability. Do not automatically accept my assumptions, designs, or implementation choices. Evaluate my ideas with informed, calm skepticism: check architectural implications, edge cases, performance concerns, and hidden failure modes. If anything is unclear, ask focused clarifying questions before proposing solutions. When my approach contains gaps, missing context, or flawed logic, point it out clearly and constructively. Your goal is to improve the design and reasoning, not to agree blindly or debate unnecessarily.

These prompts reset the AI's default behavior from "agreeable assistant" to "analytical collaborator." The rest of this article explains why this matters and how to get the most from this new dynamic.

Why AI Over-Agrees by Default

Understanding why AI tools default to agreement helps you see why explicit instructions matter.

Training Optimizes for Helpfulness, Not Truth

AI models learn from feedback that rewards helpful, pleasant interactions. When users get answers that feel good, they rate those responses highly. This creates a bias toward agreement because disagreement feels less helpful in the moment, even when it's more accurate.

Models Mirror Your Confidence Level

When you present an idea with certainty, the AI tends to accept your premise as valid. It treats your confidence as evidence of correctness, even though how sure you sound says little about whether you're right. A builder who says "I'm pretty sure this architecture will scale" gets different treatment than one who says "I think this might scale, but I'm not certain."

Conflict Avoidance Is Baked In

Models are trained to reduce friction in conversations. Being argumentative or confrontational triggers negative feedback during training, so models learn to soften disagreements or avoid them entirely. This means the AI will often find ways to validate your approach rather than point out fundamental problems.

Politeness Trumps Precision

When politeness and truth conflict, politeness often wins. The model will find diplomatic ways to agree with flawed reasoning rather than state plainly that something doesn't make sense. This feels respectful but ultimately wastes your time and leads to worse decisions.

The result is an AI that acts like a junior employee who's afraid to contradict the boss. It fills in gaps without asking questions, validates assumptions without checking them, and offers improvements on fundamentally flawed approaches instead of suggesting you reconsider entirely.

What Makes a Good AI Thought Partner

The goal isn't to make AI argumentative or contrarian. You don't want a tool that fights you on every point or plays devil's advocate just to be difficult. You want something specific: a collaborator who thinks clearly, asks good questions, and cares more about getting things right than being agreeable.

Core Qualities of Effective Partnership

| Quality | Description |
|---|---|
| Notices logical gaps | When you skip steps in your reasoning or make leaps that don't follow, a good partner catches those moments. They don't assume you have hidden reasons for the gap. They point it out and ask if you've considered what's missing. |
| Asks clarifying questions | Instead of running forward with incomplete information, they pause to get the context they need. This prevents them from solving the wrong problem or building on faulty foundations. |
| Identifies edge cases | They think about where your approach might fail, what scenarios you haven't considered, and what assumptions might not hold in real conditions. This is especially valuable for technical decisions where edge cases cause the most problems. |
| Pushes back when needed | When something doesn't make sense, they say so clearly and calmly. They don't soften criticism to the point of uselessness, but they also don't turn every interaction into a debate. |
| Stays practical | They focus on what matters for your actual situation rather than getting lost in theoretical concerns. They help you make better decisions, not just think more thoughts. |

The system prompts above encode these qualities directly. By telling the AI to prioritize accuracy over agreement, ask when uncertain, and point out flawed logic calmly, you're defining the exact behavior you want.
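If you reach a model through code rather than through the apps described above, the same idea can be sketched as a system message that is placed ahead of every conversation. The sketch below is only an illustration: the `role`/`content` message shape is an assumption modeled on common chat-style APIs (some APIs take the system prompt as a separate parameter instead), and `build_messages` is a hypothetical helper, not part of any vendor SDK.

```python
# Hypothetical sketch: the role/content message shape is an assumption
# modeled on common chat-style APIs, not a specific vendor's SDK.

THOUGHT_PARTNER_PROMPT = (
    "Act as a precise, evidence-driven thought partner. "
    "Do not default to agreement or disagreement. "
    "Approach everything I say with informed skepticism: verify assumptions, "
    "double-check your reasoning, and rely on facts rather than my confidence. "
    "If my claim contains gaps, missing context, or flawed logic, "
    "explain it calmly and concisely. If you need clarity, ask. "
    "Your priority is accuracy, insight, and intellectual honesty, "
    "not pleasing me or debating for sport."
)


def build_messages(user_text, history=None):
    """Return a message list with the thought partner prompt first.

    `history` is an optional list of earlier role/content messages.
    """
    messages = [{"role": "system", "content": THOUGHT_PARTNER_PROMPT}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_text})
    return messages


if __name__ == "__main__":
    for message in build_messages("I'm pretty sure this architecture will scale."):
        print(message["role"], "->", message["content"][:60])
```

Keeping the prompt in one constant means every conversation starts from the same role definition, which mirrors what the Custom Instructions and project Instructions fields do inside the apps.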

Why One Prompt Works for ChatGPT and Claude

Different LLMs and AI tools have different personalities. In my experience, ChatGPT tends toward confidence and enthusiasm, while Claude tends toward caution and nuance. Despite these differences, they respond remarkably well to the same high-level role definition because the core behavior you want is identical for both.

Key Behavioral Shifts

The shared prompt establishes three fundamental changes:

From assuming correctness to verifying it. Both models stop treating your confidence as evidence. Instead, they evaluate your claims against what they know and ask questions when something seems off.

From filling gaps to identifying them. Instead of completing your reasoning with their own assumptions, they point out where information is missing. This prevents the model from building elaborate responses on faulty foundations.

From avoiding conflict to pursuing clarity. Both models become willing to state plainly when something doesn't follow, rather than finding diplomatic ways to agree with flawed reasoning.

Why the Language Matters

The prompt's phrasing is deliberate. Phrases like "informed skepticism" and "intellectual honesty" establish that the goal is truth-seeking, not arguing. "Calmly and concisely" ensures pushback comes without unnecessary harshness. "Not pleasing me or debating for sport" explicitly rejects both extremes of over-agreement and over-argument.

Claude's natural caution becomes an asset with this prompt because it already tends to think carefully. ChatGPT's confidence gets channeled into clearer assertions about what's actually true rather than validation of whatever you said.

Why Cursor Needs Different Instructions

Coding involves a distinct type of rigor that general thought partnership doesn't capture. When you're building software, you need someone who thinks about systems, not just ideas.

Technical Partnership Requirements

Architecture-level awareness. Code decisions ripple through entire systems. A choice that seems fine for one component might create problems when that component interacts with others. A good technical partner considers these broader implications rather than just evaluating the immediate code.

Maintainability over cleverness. Code gets read far more than it gets written. Solutions that seem elegant today become confusing next month. A staff-level engineer cares about whether future developers, including your future self, will understand what's happening and why.

Edge case thinking. Production systems encounter scenarios that never occur in development. Users input unexpected data. Services fail at inconvenient times. Resources run out. A good technical partner thinks about these failure modes before they become production incidents.

Performance and reliability. Some approaches that work fine at small scale fall apart completely under load. Others have subtle bugs that only manifest under specific conditions. Technical partnership requires evaluating these concerns, not just whether the code runs.

The Staff Engineer Framing

The Cursor prompt frames the AI as a "staff-level engineer" specifically because that role carries these expectations. Junior engineers often focus on making code work. Staff engineers focus on making code work correctly, maintainably, and reliably at scale.

The prompt also addresses Cursor's specific tendency to anchor on whatever code is visible. Without guidance, Cursor might optimize code that shouldn't exist, add features to an architecture that's fundamentally flawed, or improve implementation details while ignoring design problems. Telling it to evaluate your "assumptions, designs, or implementation choices" prevents this narrow focus.

How Behavior Changes With These Prompts

Setting these instructions at the system level transforms every interaction without requiring you to remind the AI each time. Here's what actually changes:

Observable Differences

The AI stops accepting your premises automatically. Without the prompts, you might say "I'm building a feature that lets users do X" and the AI immediately starts helping you build it. With the prompts, the AI might respond: "Before we dive in, I want to clarify what problem this solves for users. Is X something they've requested, or is this based on an assumption about what they need?"

Questions come before suggestions. The AI recognizes when it lacks context to give good advice. Instead of generating plausible-sounding recommendations based on incomplete information, it asks focused questions. This prevents you from getting confident advice built on the AI's misunderstanding of your situation.

Blind spots get identified explicitly. When your reasoning has gaps, the AI points them out directly. "You're assuming that users will discover this feature through the main navigation, but you haven't mentioned how you'll surface it to users who don't explore the menu" is more useful than "Great idea! You might also want to consider adding some onboarding."

Consistency improves across conversations. Because the instructions set the AI's identity rather than just asking for a specific behavior once, you get the same analytical approach whether you're discussing product strategy, debugging code, or planning a project. The thought partner relationship persists.

Your own thinking sharpens. When you know the AI will question unclear reasoning, you start clarifying your thinking before asking. You anticipate what questions might come up. You consider edge cases preemptively. The AI becomes a second brain that actually processes rather than just stores and retrieves.

Before and After Example

A concrete example illustrates the difference. Without the prompts, sharing a product idea might generate: "This sounds like a great concept! Here are five ways to make it even better..." With the prompts, the same idea might get: "I see the appeal, but I need to understand a few things. First, who specifically is the target user? You mentioned 'creators' but that's quite broad. Second, how do they currently solve this problem, and why would they switch to your solution? Third, what's your assumption about how users will find this feature?"

The second response forces you to think more carefully. It might feel less satisfying in the moment, but it leads to better decisions.

Techniques for Getting Even Better Responses

The system prompts establish the foundation, but specific questioning techniques extract maximum value from your analytical AI partner. These approaches work particularly well once the AI is primed for rigorous thinking.

High-Value Questions to Ask

Ask for failure modes directly. "What would break this idea?" or "Where might this approach fail?" prompts the AI to think adversarially without being adversarial. You get a list of potential problems before they become actual problems. This works for product ideas, architectural decisions, go-to-market strategies, and almost any plan.

Request explicit assumptions. "What am I implicitly assuming here?" surfaces the hidden premises your reasoning depends on. Often we don't realize we're assuming something until someone points it out. The AI can identify assumptions about user behavior, market conditions, technical constraints, or team capabilities that might not hold.

Explore tradeoffs systematically. "What's the downside if we choose X instead of Y?" forces consideration of costs alongside benefits. Every decision involves tradeoffs, but excitement about benefits often obscures the costs. This question brings balance to evaluations.

Demand genuine alternatives. "Give me two completely different ways to approach this" prevents anchoring on your initial idea. The AI generates approaches you might not have considered, expanding your option space. Sometimes the alternatives reveal that your original approach isn't optimal.

Probe for missing context. "What else should I know before I decide?" identifies information gaps. The AI might flag that you haven't considered regulatory constraints, competitor responses, technical dependencies, or user research that would inform your choice.

These questions pair naturally with the system prompts. An AI configured for analytical partnership will take these questions seriously rather than offering superficial responses. You'll get thoughtful analysis instead of generic lists.
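If you want these five questions on hand for every decision, one option is to keep them in a small reusable helper. This is a minimal sketch, not a prescribed workflow: `follow_up_questions` and its parameters are hypothetical names, and the question wording is taken from the list above.

```python
def follow_up_questions(option_x="X", option_y="Y"):
    """Return the five high-value questions, filling in the tradeoff pair."""
    return [
        "What would break this idea?",                                    # failure modes
        "What am I implicitly assuming here?",                            # explicit assumptions
        f"What's the downside if we choose {option_x} instead of {option_y}?",  # tradeoffs
        "Give me two completely different ways to approach this.",        # genuine alternatives
        "What else should I know before I decide?",                       # missing context
    ]


if __name__ == "__main__":
    # Example: paste these one at a time after describing your plan.
    for question in follow_up_questions("a monolith", "microservices"):
        print(question)
```

Asking the questions one at a time, rather than all at once, leaves room for the AI to ask its own clarifying questions in between.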

Common Mistakes to Avoid

Even with good system prompts, certain behaviors can undermine the thought partner dynamic.

Pitfalls That Reduce Effectiveness

Dismissing pushback too quickly. When the AI questions your reasoning, resist the urge to immediately defend your position. The whole point is getting honest feedback. If you dismiss every challenge, the AI tends to stop challenging for the rest of the conversation, defeating the purpose.

Providing insufficient context. The AI can only evaluate what you share. If you describe a situation vaguely, you'll get vague analysis back. Share relevant constraints, goals, and background information so the AI has enough to work with.

Expecting the AI to decide for you. The thought partner role means the AI helps you think better, not that it makes decisions on your behalf. You still need to weigh the analysis and make the call. The AI surfaces considerations you might have missed.

Confusing skepticism with negativity. Informed skepticism means questioning assumptions and checking logic, not finding fault with everything. If the AI points out potential problems, that's valuable feedback, not pessimism. Good ideas survive scrutiny.

Forgetting to update your thinking. When the AI identifies a flaw in your reasoning, incorporate that insight. Don't just acknowledge it and proceed with your original plan unchanged. The value comes from actually improving your approach based on the analysis.

Building a Better Feedback Loop

The system prompts transform AI from agreeable assistant to analytical collaborator. This matters because good thinking requires challenge. Ideas that never face scrutiny often contain hidden flaws. Reasoning that goes unquestioned might rest on faulty assumptions. Plans that seem complete might have crucial gaps.

Builders, engineers, and founders need tools that think with them, not tools that validate whatever they're already thinking. The prompts in this guide give you exactly that: AI that questions assumptions, identifies gaps, and cares more about getting things right than being agreeable.

Start by adding the appropriate prompt to your tools today. ChatGPT gets the general thought partner prompt in Custom Instructions. Claude gets the same prompt in a project's Instructions field. Cursor gets the staff engineer variant in User Rules or a project-level .cursorrules file.

Then pay attention to how interactions change. Notice when the AI asks clarifying questions instead of assuming. Notice when it points out logical gaps instead of building on them. Notice when it identifies edge cases you hadn't considered. These moments represent the value of genuine intellectual partnership.

Your AI tools can be more than yes-machines. They can be thought partners that make you think more clearly, decide more carefully, and build more effectively. You just have to tell them that's what you want.